Enhanced Online Study Experience

To support a research project, I was tasked with developing an application from scratch that integrates a survey and a chat window in a side-by-side view.

Team: Full team composition and roles for the research project are detailed in the publication. All work presented in this case summary was completed by me, with feedback and comments from the supervisor of the project.

Tasks Completed

- Wireframing

- Full-stack web development

- Online pilot testing with participants (n=25)

- Executing an online research study with participants (n=256)

- Reporting the system configuration for a scientific publication:

Fernandes, D., Villa, S., Nicholls, S., Haavisto, O., Buschek, D., Schmidt, A., Kosch, T., Shen, C., & Welsch, R. (2025). AI makes you smarter, but none the wiser: The disconnect between performance and metacognition. Computers in Human Behavior, Vol. 175. https://doi.org/10.1016/j.chb.2025.108779

Artifacts

- The study frontend built with React and styled with NextUI & TailwindCSS

- A Python proxy server that handled request queuing and communication with both the OpenAI API and our database

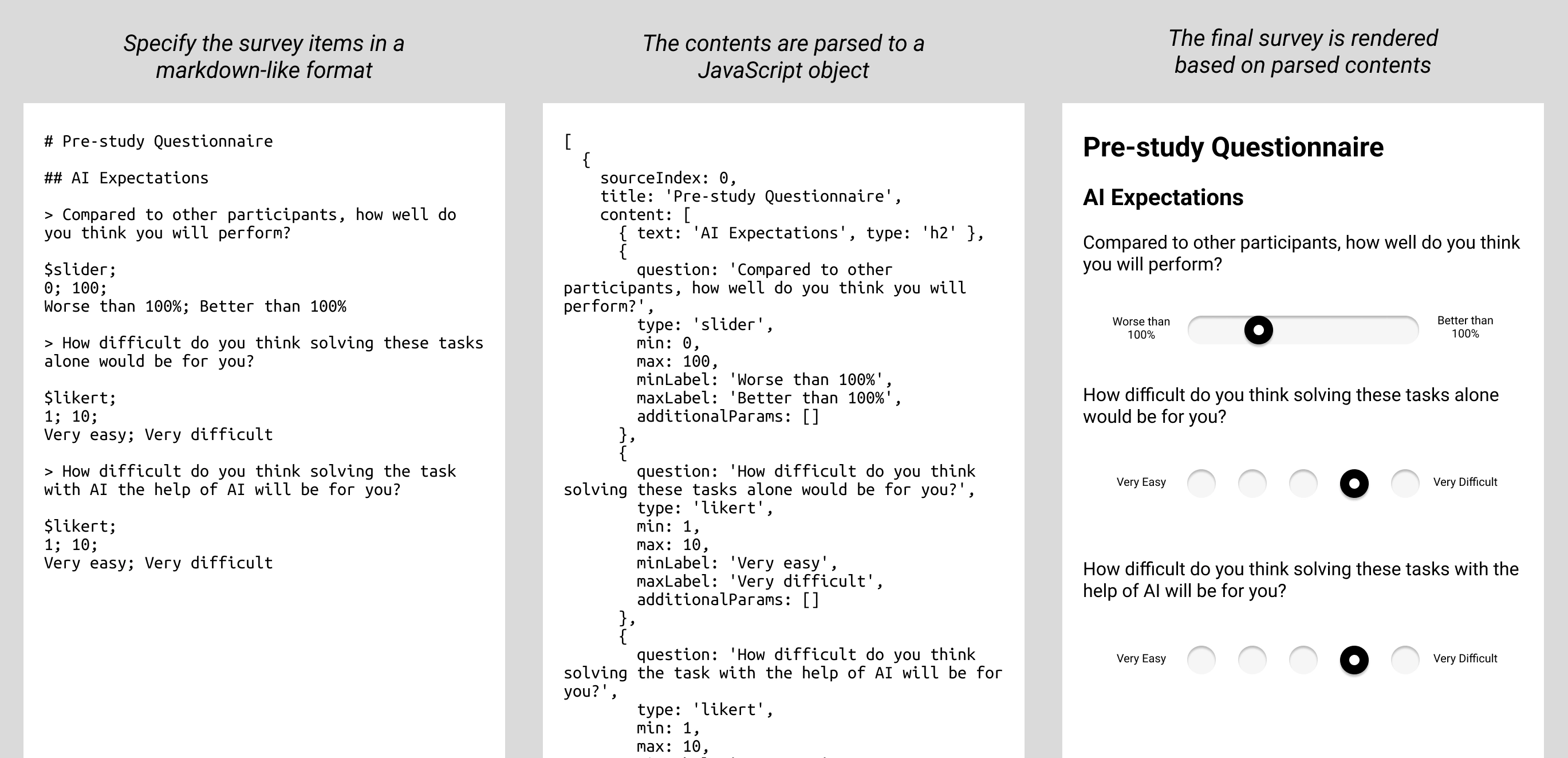

- A helper script that converts human-readable, markdown-like text into the JSON survey data rendered by the frontend
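The case study doesn't describe how the proxy's request queue was implemented. As a minimal sketch, assuming the goal was to cap how many requests reach the OpenAI API at once, a fixed-size thread pool gives that behavior: extra requests wait in the pool's internal queue until a worker frees up. `UpstreamQueue` and `fake_upstream` are hypothetical names, and `fake_upstream` only stands in for the real API call.

```python
import threading
import time
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable


class UpstreamQueue:
    """Caps the number of concurrent calls to an upstream API (hypothetical helper).

    Requests beyond `max_concurrent` wait in the executor's internal queue
    until one of the worker threads becomes free.
    """

    def __init__(self, call_upstream: Callable[[str], str], max_concurrent: int = 4) -> None:
        self._call_upstream = call_upstream
        self._pool = ThreadPoolExecutor(max_workers=max_concurrent)

    def submit(self, prompt: str) -> Future:
        # Returns immediately; the caller blocks on .result() when it needs the reply.
        return self._pool.submit(self._call_upstream, prompt)

    def shutdown(self) -> None:
        # Wait for queued and running requests to finish.
        self._pool.shutdown(wait=True)


# Usage with a fake upstream that records the peak number of simultaneous calls.
_lock = threading.Lock()
_active = 0
peak = 0


def fake_upstream(prompt: str) -> str:
    """Hypothetical stand-in for the OpenAI API call."""
    global _active, peak
    with _lock:
        _active += 1
        peak = max(peak, _active)
    time.sleep(0.05)  # simulate network latency
    with _lock:
        _active -= 1
    return prompt.upper()


api_queue = UpstreamQueue(fake_upstream, max_concurrent=2)
futures = [api_queue.submit(f"prompt {i}") for i in range(6)]
replies = [f.result() for f in futures]
api_queue.shutdown()
print(replies, "peak concurrency:", peak)
```

Because each upstream call spends most of its time waiting on the network, threads are a reasonable fit here despite Python's global interpreter lock.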

Background

In online studies, any additional step that takes participants away from the study view risks data loss: participants may not follow the instructions carefully enough (so their data has to be discarded), or they may leave responses incomplete due to distractions or frustration with other services.

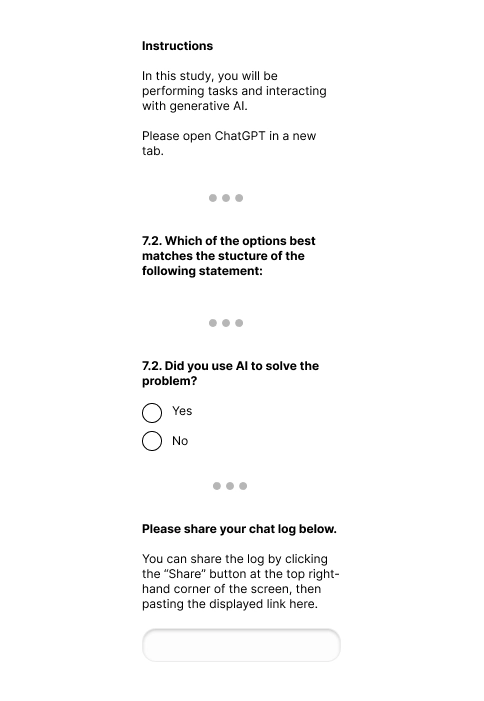

Our study investigated participants’ interactions with conversational AI. Before I joined the project, an initial pilot study had been conducted in Qualtrics, in which participants had to navigate to ChatGPT on their own and then download and upload their chat logs at the end of the study.

A setup like this might seem enticing to a researcher: it requires very little technical configuration, works with essentially any survey platform, and lowers the cost of running a study because participants use their own ChatGPT accounts. From a participant’s perspective, however, having to use a third-party service (which they might first need to register for) or switch between browser tabs can be tedious and raise the barrier to taking part in a study.

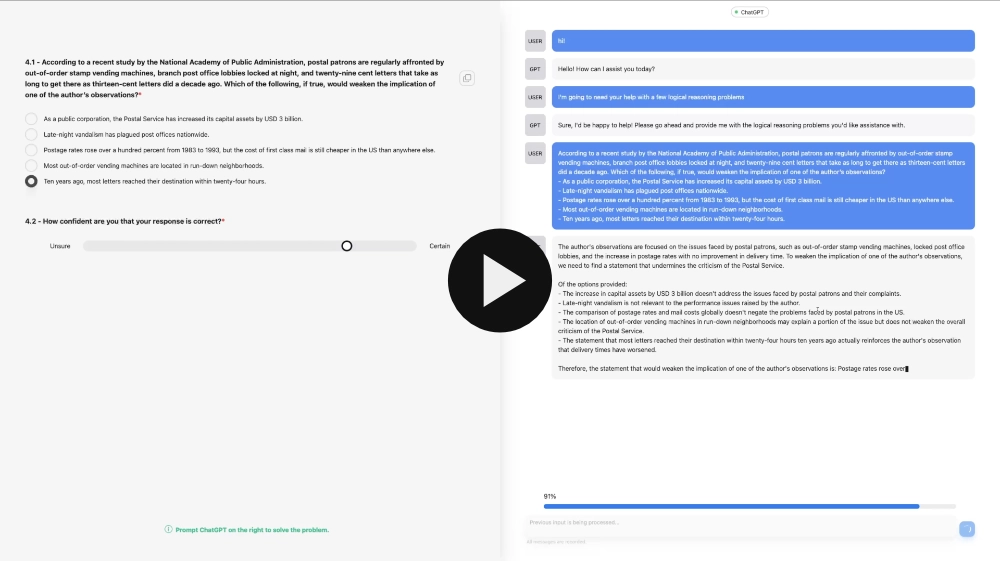

The problem is compounded by certain tasks participants are given during the study. If the task is to solve verbal problems using the AI, each problem requires selecting the problem text and answer options, switching to a second tab, pasting, and possibly editing the prompt. Moreover, because there is no connection between the survey and the chat interface and participants self-report their AI use, the data is inherently unreliable: some participants might simply skip the chatbot altogether, producing data that is useless for investigating AI interactions.

Finally, a study conducted this way depends on participants sharing their chat interactions correctly. While this might be trivial for most participants, every participant’s data counts when doing research on a limited budget, especially the data of less technologically proficient participants, who are the most likely to stumble at this step. Moreover, a link to a chat log hosted on the provider’s servers remains accessible only as long as the provider keeps it available.

Designing a Solution

I was tasked with designing and developing a study experience in which participants could fill in a survey and use ChatGPT alongside it, without needing to register additional accounts, switch between windows, or download and re-upload chat logs. After a few quick sketches and wireframes to make sure we were on the same page, I began developing the system.

The next phase was heavy on software engineering, as the application was built from scratch. I chose React as the front-end framework for its maturity and structure, and because of my prior experience with it. To speed up prototyping, I used TailwindCSS alongside NextUI components. On the server side, I chose Python with Flask; the server could remain fairly simple, as it mostly acted as a proxy between the client-side application, the OpenAI API, and our MongoDB database.
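The proxy role described above can be sketched as follows, assuming the client sends the conversation history with each turn and the server logs every exchange. `ChatProxy`, `handle_turn`, and the stored document fields are hypothetical and not taken from the study's code. The LLM call and the database write are injected as plain functions, so the relay logic can be exercised without network access; in a deployed server they would wrap the OpenAI API call and a MongoDB insert.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, Dict, List

Message = Dict[str, str]  # {"role": "user" or "assistant", "content": "..."}


@dataclass
class ChatProxy:
    """Relays chat turns from the client to an LLM and logs them (hypothetical design)."""

    complete: Callable[[List[Message]], str]  # would wrap the OpenAI API call
    save: Callable[[dict], None]              # would insert into a MongoDB collection

    def handle_turn(self, participant_id: str, history: List[Message], user_text: str) -> str:
        messages = history + [{"role": "user", "content": user_text}]
        reply = self.complete(messages)
        # Store the full exchange server-side, so participants never export chat logs.
        self.save({
            "participant_id": participant_id,
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "messages": messages + [{"role": "assistant", "content": reply}],
        })
        return reply


# Usage with in-memory stand-ins for the LLM and the database.
stored: List[dict] = []
proxy = ChatProxy(complete=lambda msgs: "echo: " + msgs[-1]["content"], save=stored.append)
print(proxy.handle_turn("p001", [], "Hello"))
```

With this split, a Flask route would only need to parse the request body and call `handle_turn`.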

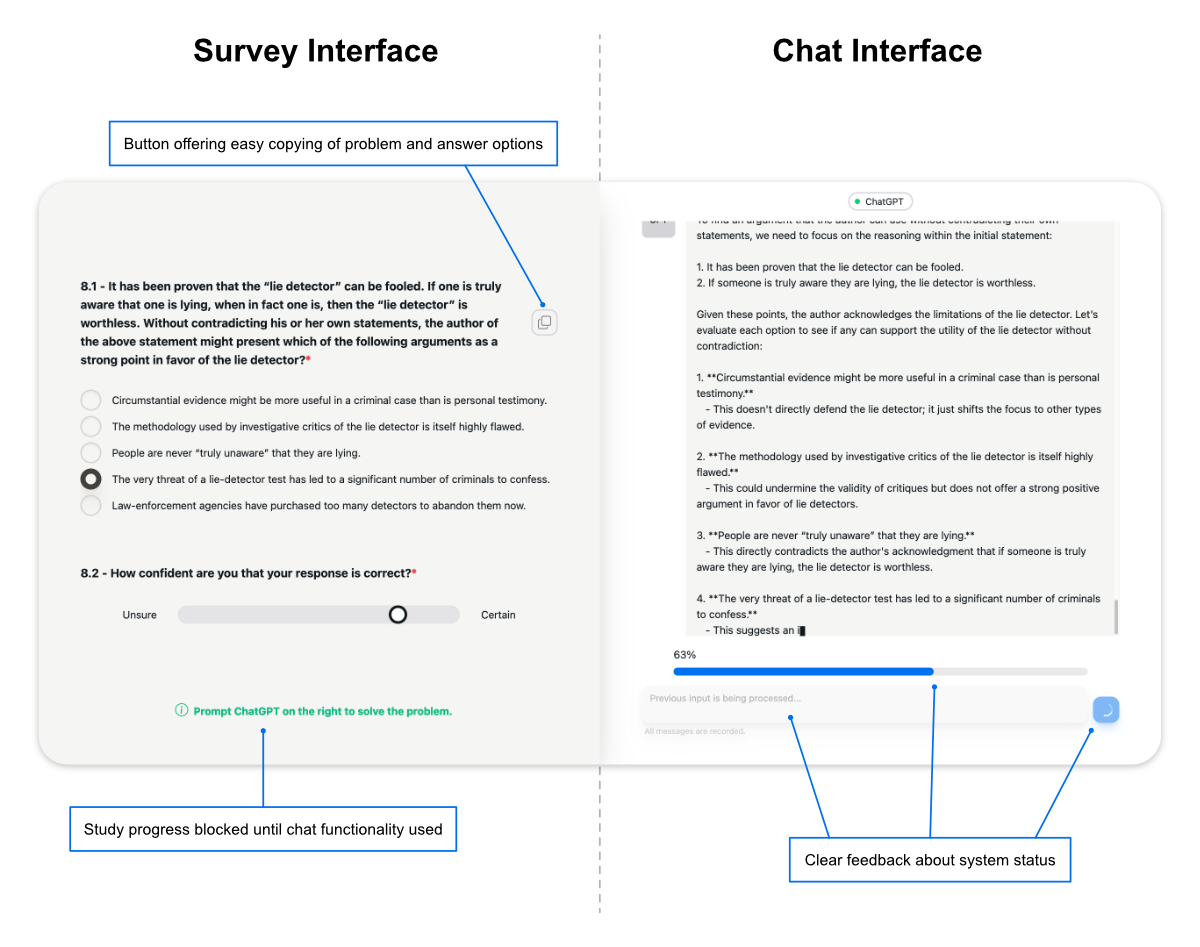

The designs of both the survey and chat interfaces had largely been established by this point, so there was no need to reinvent the wheel. On the contrary, a familiar design language would make it easier for participants of all backgrounds to take part in the study. The survey side ended up fairly run-of-the-mill, with text fields, radio buttons, and sliders spread over several pages. For the chat window, instead of emulating the “techy” feel of AI assistants, I opted for a more neutral style resembling messaging applications. Visual neutrality was also important so as not to influence participants.

A few quality-of-life features were included. Because the study involved participants prompting the chatbot with specific information, I added a button to some of the questions for copying their text over to the chat. To communicate the chat system’s status, I also included spinners to indicate processing, as well as a progress bar showing response progress.

After building the prototype, we ran a new pilot study. UEQ-S results from the pilot indicated that participants found the user experience both practical and enjoyable. Most importantly, when it came time to run the main study, the application did its job and enabled us to gather robust data from hundreds of online participants.
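For readers unfamiliar with the UEQ-S: as it is commonly scored, its eight items are answered on a seven-point scale and recoded to the range -3 to +3, with items 1-4 forming the pragmatic quality scale ("practical") and items 5-8 the hedonic quality scale ("enjoyable"). The sketch below computes those scale means. `ueq_s_scores` is a hypothetical helper, not the analysis script used in the study, and the item order and 1-7 coding are assumptions worth checking against the official UEQ handbook.

```python
from statistics import mean
from typing import Dict, List

# Assumed UEQ-S layout: items 1-4 = pragmatic quality, items 5-8 = hedonic quality,
# each answered on a 1..7 scale with the positive pole on the right.
PRAGMATIC_ITEMS = range(0, 4)
HEDONIC_ITEMS = range(4, 8)


def ueq_s_scores(responses: List[List[int]]) -> Dict[str, float]:
    """Return mean pragmatic, hedonic and overall scores on the -3..+3 scale."""
    per_participant = []
    for answers in responses:
        if len(answers) != 8 or not all(1 <= a <= 7 for a in answers):
            raise ValueError(f"expected 8 answers between 1 and 7, got {answers}")
        recoded = [a - 4 for a in answers]  # map 1..7 onto -3..+3
        per_participant.append((
            mean(recoded[i] for i in PRAGMATIC_ITEMS),
            mean(recoded[i] for i in HEDONIC_ITEMS),
            mean(recoded),
        ))
    # Scale scores are averaged per participant first, then across participants.
    return {
        "pragmatic": mean(p[0] for p in per_participant),
        "hedonic": mean(p[1] for p in per_participant),
        "overall": mean(p[2] for p in per_participant),
    }
```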

On the researcher experience side, Qualtrics’s WYSIWYG questionnaire editor is arguably hard to beat. To make editing the survey items in our custom solution less dependent on programming ability, I wrote a simple parser script that lets researchers write questionnaires in a markdown-like text format.
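The parser's input format isn't specified here, so the following is a minimal sketch under an invented syntax: `## ` starts a page, `? id | type | text` declares a question, and `- ` lines add answer options to the preceding question. `parse_survey` and the output field names are hypothetical; the real script's format and JSON schema may differ.

```python
import json
from typing import List, Optional


def parse_survey(source: str) -> List[dict]:
    """Convert the hypothetical markdown-like survey format into page dicts."""
    pages: List[dict] = []
    question: Optional[dict] = None
    for lineno, raw in enumerate(source.splitlines(), start=1):
        line = raw.strip()
        if not line:
            continue  # blank lines are only for readability
        if line.startswith("## "):
            pages.append({"title": line[3:].strip(), "questions": []})
            question = None
        elif line.startswith("? "):
            if not pages:
                raise ValueError(f"line {lineno}: question before any page heading")
            # maxsplit=2 keeps any '|' inside the question text intact
            parts = [part.strip() for part in line[2:].split("|", 2)]
            if len(parts) != 3:
                raise ValueError(f"line {lineno}: expected '? id | type | text'")
            qid, qtype, text = parts
            question = {"id": qid, "type": qtype, "text": text}
            pages[-1]["questions"].append(question)
        elif line.startswith("- "):
            if question is None:
                raise ValueError(f"line {lineno}: option outside a question")
            question.setdefault("options", []).append(line[2:].strip())
        else:
            raise ValueError(f"line {lineno}: unrecognized line {line!r}")
    return pages


example = """
## About you
? ai_use | radio | How often do you use AI chatbots?
- Never
- Weekly
- Daily
? comments | text | Anything else you would like to add?
"""
print(json.dumps(parse_survey(example), indent=2))
```

Failing loudly with the offending line number is a deliberate choice in this sketch: researchers editing the text file get an immediate, specific error instead of a silently malformed survey.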